rsnapshot is a utility that uses rsync to backup files locally or it can backup files from a remote server.

While trying to figure out a good solution for backing up an Ubuntu Server I decided to try rsnapshot, however since it can either create a local backup or pull a remote backup it needs to be configured to do that on the backup server side. It does not “push” a backup to a backup server.

Some helpful snippits from the man file.

rsnapshot will typically be invoked as root by a cron job, or series of cron jobs. It is possible, however, to run as any arbitrary user with an alternate configuration file.

...

USAGE

rsnapshot can be used by any user, but for system-wide backups you will probably want to run it as root.

...

NOTES

Make sure your /etc/rsnapshot.conf file has all elements separated by tabs. See

/usr/share/doc/rsnapshot/examples/rsnapshot.conf.default.gz for a working example file.

Make sure you put a trailing slash on the end of all directory references. If you don't, you may have extra directories created in your snapshots. For more information on how the trailing slash is handled, see the rsync(1) manpage.

Overview

Scenario

Host A runs xyz application and host B is the backup server. We create a backup user on host A, host B then uses that user to ssh and rsync backups to itself.

- Create backup user

- Configure rysnc to be used without a password

- Setup SSH Key, aka Passwordless authentication (On backup server)

- Setup rsnapshot config (On backup server)

- Configure rsnapshot in crontab (On backup server)

- Final Testing

Create backup user

The following commands are fairly straight forward. Change backupuser to whatever you want to call your backup user.

sudo useradd -m backupuser

passwd backupuser

sudo usermod -a -G sudo backupuser

Configure rysnc to be used without a password

We need to setup the backup user to be able to use “sudo rsync” without having to input the user password. If we don’t use sudo we can’t access system files for backups. And if we have to manually input the password every time rsync runs, then the backups would not be automatic. The following link was helpful.

https://unix.stackexchange.com/questions/325100/proper-way-to-set-up-rsnapshot-over-ssh

All we need to do is create a file in /etc/sudoers.d/username and then tell it we don’t need to enter a password when “sudo rsync” is run.

sudo tee /etc/sudoers.d/backupuser <<<'backupuser ALL = (root) NOPASSWD: /usr/bin/rsync'

Setup SSH Key, aka Passwordless authentication (On backup server)

Log into the backup server

Create SSH keys. Note that since rsnapshot wants to run as root, we create the key and copy it as the root user.

sudo ssh-keygen

Accept all the defaults so we can login from the backup server without having to enter in a password.

Copy ssh key to the server we are wanting to back up

sudo ssh-copy-id backupuser@ip

enter in the password and the the key should get copied it over. Once complete, verify that you can login without having to enter in a password.

Setup rsnapshot config (On backup server)

Open up the rsnapshot config file and modify where appropriate. /etc/rsnapshot.conf

Change the path to where the snapshots are stored. By default it stores them under /.snapshots. I moved it under a local user as I am not needing to use rsnapshot to backup the local backup server files.

# SNAPSHOT ROOT DIRECTORY

snapshot_root /home/user/rsnapshot/snapshots/

Add a daily backup option under Backup levels

# BACKUP LEVELS / INTERVAL #

retain daily 6

Setup remote server to get a backup from. Replace ipaddress and directories as needed. hostname is the sever name. You can change to whatever you want.

### BACKUP POINTS / SCRIPTS ###

# LOCALHOST

# Comment or delete entries unless you want to backup those as well

# EXAMPLE.COM

backup backupuser@ipaddress:/home/ hostname/ +rsync_long_args=--rsync-path="sudo rsync"

If you would like to back up multiple locations you can create multiple entries with different remote paths. Example locations to add

backup backupuser@ipaddress:/etc/ hostname/ +rsync_long_args=--rsync-path="sudo rsync"

backup backupuser@ipaddress:/usr/local/ hostname/ +rsync_long_args=--rsync-path="sudo rsync"

Verify that the config is good with

sudo rsnapshot configtest

It should return Syntax OK

Setup Crontab

sudo crontab -e

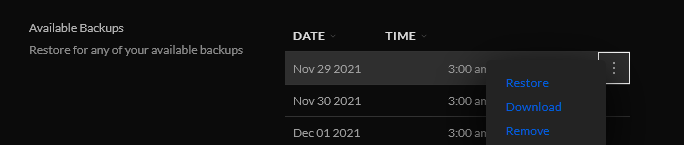

Add the following line to run rsnapshot at 3AM every day. More information about crontab can be found here.

0 3 * * * /usr/bin/rsnapshot daily

Final Testing

Manually run a backup to verify everything is set up correctly.

sudo rsnapshot daily

After it runs you can check the directory you specified in the config file to verify that the files did get copied.