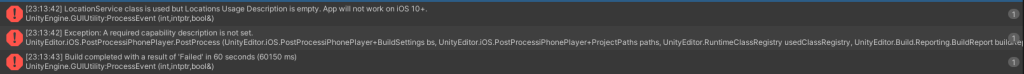

I ran into an interesting problem while trying to build a Unity project for iOS. The build failed with this error:

“LocationService class is used but Locations Usage Description is empty. App will not work on iOS 10+.

UnityEngine.GUIUtility:ProcessEvent (int,intptr,bool&)”

Well, as far as I know, nothing in the project calls or requests location services. I probably could have just filled in a reason for requesting the service (the usage description the error mentions) under the build options in Project Settings. But why “use” something we don’t need?

Using the handy grep tool from a terminal, I searched the project for “LocationService”, which returned a couple of hits in files in the PlayMaker folder.
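For anyone who wants to repeat that search, here is a rough sketch of it. The function name `find_location_usage` is made up for illustration, and limiting the search to `*.cs` files is my own assumption to cut down on noise; a plain recursive grep over the whole project works too.

```shell
# Sketch: list C# files under a folder that mention LocationService.
# find_location_usage is a hypothetical helper name, not part of Unity or PlayMaker.
find_location_usage() {
  # -r recurses into subfolders, -l prints only the names of matching files.
  # --include limits the search to C# sources (an assumption; drop it to search everything).
  grep -rl --include='*.cs' 'LocationService' "${1:-Assets}"
}
```

Run it from the project root as `find_location_usage Assets`, or pass a different folder to narrow the search.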

It looks like PlayMaker comes with a couple of preconfigured “scripts” for starting, stopping, and requesting location.

Deleting the following four C# files resolved the error, and the project built fine afterwards:

GetLocationInfo

StopLocationServiceUpdates

StartLocationServiceUpdates

ProjectLocationToMap

You can find these files in your project under Assets > PlayMaker > Actions > Device.
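If you would rather not delete the files outright, a more cautious variation is to move them out of the project. This is only a sketch: `remove_location_actions` and the default backup folder are hypothetical names, and it assumes the four files carry a `.cs` extension and may have Unity `.meta` files sitting next to them.

```shell
# Sketch: move PlayMaker's four location actions out of the Unity project
# instead of deleting them. remove_location_actions is a hypothetical helper.
remove_location_actions() {
  device_dir="${1:-Assets/PlayMaker/Actions/Device}"
  backup_dir="${2:-../PlayMakerLocationBackup}"   # assumed location outside Assets
  mkdir -p "$backup_dir" || return 1
  for name in GetLocationInfo StopLocationServiceUpdates \
              StartLocationServiceUpdates ProjectLocationToMap; do
    # Handle each script and its .meta file, if one exists.
    for file in "$device_dir/$name.cs" "$device_dir/$name.cs.meta"; do
      # Skip missing files so the function is safe to run more than once.
      if [ -f "$file" ]; then
        mv "$file" "$backup_dir/"
      fi
    done
  done
}
```

Call it from the project root with no arguments to use the defaults, then rebuild. Moving the files back into the Device folder restores the actions if a later project needs them.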